Automated Medical Diagnostics Through Image Classification - The Tremendous Impact On Patients And Medical Providers

Oumnia El Khazzani

about 7 years ago

Abstract

This article reviews a catalogue of 108 automated diagnostic tools available for use that rely on AI-driven image classification, and then discusses the implications for patients and providers. Radiology use cases are the primary focus. These tools were first brought to market as computer aided detection; the generation developed over the last 5 years performs meaningfully better because it applies advances in deep learning models to image classification.

Computer aided detection.

It is springtime for the automatic diagnosis of health issues, with green shoots powered by advances in genomics, cell & gene biology, and artificial intelligence sprouting up across the medical landscape.

In this article we’ll take a look at how advances in image classification are being applied to medical images to make partial, and in some cases, complete, diagnoses.

Computer aided detection has been used for over a decade as one of the early areas of digital health. What is new are the dramatic improvements in performance. Advances in artificial intelligence have allowed improvements to computer aided decisions that are bringing their diagnostic capabilities to human levels of performance and, in some cases, superhuman levels.

The trend is clear: more and more conditions will be diagnosable computationally. There are many implications for patients and providers - direct to consumer models, early diagnosis, and redefining primary care - to name a few. Through early diagnosis, technology advances will allow the way medicine is taught and practiced to evolve from symptom-based to preventative.

For the devices covered here, I considered software that uses imaging as model inputs - mainly CT, PET, MRI, X-ray, and ultrasound. In subsequent articles, I will review other modalities used for automated diagnostics - biomarkers, genomics, and health records - but to avoid boiling the ocean in a single pass, I am looking at only a subset of the diagnostic techniques now, namely software models which take images as their input.

Keep in mind that in the context of computational solutions, a device is a piece of software, and that governments around the world have approved numerous software solutions as medical devices. As part of this article, I’ll also be referencing and sharing a catalogue I created so that care providers can identify available products that enable patient care improvements, https://www.automated.health.

First, I will take a second to frame what AI image-based diagnostics means.

How is predicting something with an AI model different than other ways to predict things?

The biggest difference is that AI is a software program that learns from experience.

For contrast, an example of a program that does not learn is an equation that predicts that the cost of a house is equal to the number of bedrooms + bathrooms + lot size. If I feed it more and more housing data, mathematically speaking, the equation’s predictive capabilities never improve. Deep Learning, the AI technique used by nearly all the products reviewed, would get better and better as it is fed more data. That is the distinguishing feature of an algorithm used for an AI model - it gets better as it has more experiences, that is, processes more data.
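To make the contrast concrete, here is a minimal sketch. It is not deep learning: it swaps in the simplest possible learned model, a one-parameter least-squares fit, and compares it with a hand-written rule on synthetic data. The house sizes, the "true" slope of 3.0, and the noise level are all assumptions invented for the illustration.

```python
import random

def fixed_rule(size):
    # Hand-written formula: it never changes, no matter how much data exists.
    return 1.0 * size

def fit_slope(samples):
    # Least-squares slope through the origin: w = sum(x*y) / sum(x*x).
    num = sum(x * y for x, y in samples)
    den = sum(x * x for x, _ in samples)
    return num / den

def mean_abs_error(predict, samples):
    # Average absolute gap between predicted and actual price.
    return sum(abs(predict(x) - y) for x, y in samples) / len(samples)

def make_samples(rng, n, true_slope=3.0, noise=10.0):
    # Synthetic "houses": price = true_slope * size + Gaussian noise.
    out = []
    for _ in range(n):
        x = rng.uniform(50.0, 200.0)
        out.append((x, true_slope * x + rng.gauss(0.0, noise)))
    return out

rng = random.Random(0)
test_set = make_samples(rng, 1000)

# The fixed rule is scored once; more training data cannot change it.
fixed_error = mean_abs_error(fixed_rule, test_set)

# The learned model is refit on progressively larger training sets.
learned_errors = {}
for n in (5, 50, 5000):
    w = fit_slope(make_samples(rng, n))
    learned_errors[n] = mean_abs_error(lambda x, w=w: w * x, test_set)
    print("training examples:", n, "learned slope:", round(w, 3),
          "test error:", round(learned_errors[n], 2))

print("fixed rule test error:", round(fixed_error, 2))
```

The fixed rule's error stays put however many examples exist, while the fitted slope is re-estimated from whatever data it is given. With a real deep learning model the fitting step is far more involved, but the pattern of learning parameters from examples is the same.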

There are many highly effective rule-based and statistical approaches to making predictions that work well. So, this is not to say AI is better in all cases or will replace existing techniques across the board; there are a handful of situations where AI is better, however, and it turns out image classification is one.

For an example of how image classification works that most people will be able to relate to, consider a social media site, perhaps one with over a billion active users. Years ago, if you wanted to tag people in pictures, you needed to do so manually. This allowed the social media giant to build a huge dataset - what we consider a training set in AI - of labeled images. They were able to use this dataset to train models that could recognize pictures that had faces in them. If you’ve been a Facebook user for more than 5 years, you may remember the switch when you stopped positioning crosshairs over faces and tagging people, and small boxes started appearing for you to fill in the name of the person. That’s the moment when their model became good enough to automatically detect faces.

Getting back to the healthcare setting, training image classifiers for different conditions similarly requires annotated images. Instead of pictures annotated with the location of faces, the x-rays, MRIs, CT scans and other images used to train medical classifiers are labeled with the condition and a bounding box. The key requirement is to have a fair number of images with the aspect you are trying to automatically detect and label, for instance, a set of chest x-rays with cancer nodules outlined.
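As a rough illustration of what one labeled training example might look like, here is a small sketch. The field names, file names, and pixel coordinates are all hypothetical; real datasets use their own annotation formats.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class BoundingBox:
    # Pixel coordinates of the outlined region (hypothetical convention:
    # top-left corner plus width and height).
    x: int
    y: int
    width: int
    height: int

@dataclass(frozen=True)
class Annotation:
    image_id: str                 # which image the label belongs to
    condition: str                # the labeled condition
    box: Optional[BoundingBox]    # None when there is nothing to outline

def count_by_condition(annotations: List[Annotation]) -> Counter:
    # How many labeled examples exist for each condition.
    return Counter(a.condition for a in annotations)

# Made-up entries, for illustration only.
training_set = [
    Annotation("chest-0001.png", "nodule", BoundingBox(120, 88, 32, 30)),
    Annotation("chest-0002.png", "nodule", BoundingBox(301, 190, 18, 21)),
    Annotation("chest-0003.png", "no_finding", None),
]

print(count_by_condition(training_set))
```

Counting examples per condition is a quick way to check whether a dataset has a fair number of images for the aspect you want to detect.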

Review of solutions and conditions

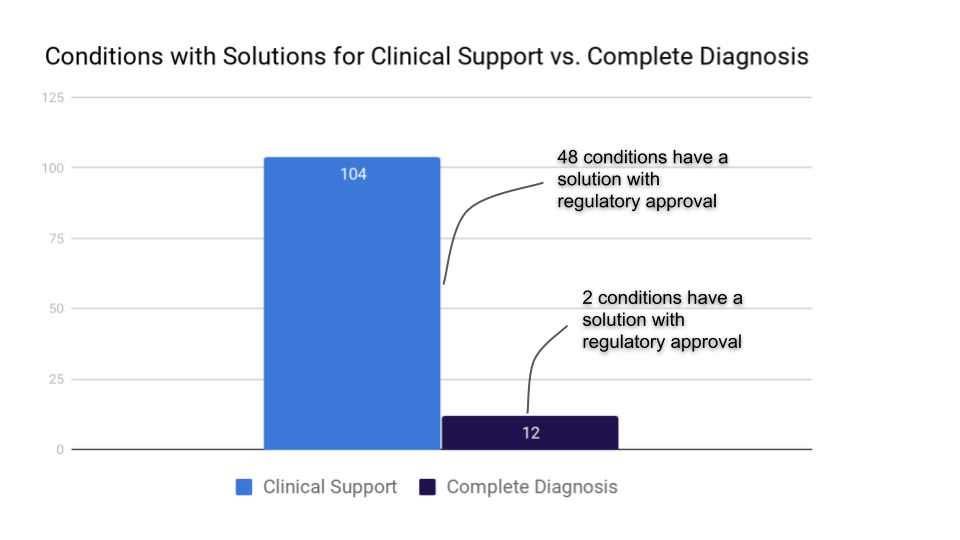

Let’s jump into a review of the available solutions. Slightly over a hundred conditions can be detected by software with an AI driven image classifier at its core. If you are curious to see the complete list, follow this link. Of these conditions, 12 have a solution that can provide a complete diagnosis, which you can explore via this link.

Two of the approaches that are able to generate a complete diagnosis have received regulatory approval: a bone age assessment in South Korea and diabetic retinopathy in the United States.

The remaining devices provide a complete diagnosis, but have not received approval and are for research or clinical support use only. Complete Diagnosis used here refers to a software program that takes image inputs and generates a diagnosis which does not need to be reviewed by a physician, whereas software meant for diagnostic support is intended to assist a clinician, but not determine a specific condition without the clinician's review.

To see what an automated complete diagnosis looks like, let’s take a look at an FDA approved device that can automatically diagnose diabetic retinopathy, IDx-DR. The device can be run by a technician who “requires minimal operator training” and it produces a diagnosis in under sixty seconds. Based on the diagnosis, the recommendation for the patient to follow is either: “Negative for more than mild diabetic retinopathy: retest in 12 months.” “More than mild diabetic retinopathy detected: refer to an eye care professional.”

In this process, physicians do not need to be involved in administering the exam or in the diagnosis.
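The two-outcome report quoted above can be sketched in a few lines. This is only an illustration of the shape of the output: the numeric score and the 0.5 threshold are assumptions of mine, not a description of how IDx-DR works internally.

```python
NEGATIVE = "Negative for more than mild diabetic retinopathy: retest in 12 months."
POSITIVE = "More than mild diabetic retinopathy detected: refer to an eye care professional."

def recommend(score, threshold=0.5):
    """Map a hypothetical model score in [0, 1] to one of the two reports."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    # At or above the (assumed) threshold, the patient is referred.
    return POSITIVE if score >= threshold else NEGATIVE

print(recommend(0.12))
print(recommend(0.91))
```

The point is that the output is a recommendation the patient can act on directly, rather than a score that a physician has to interpret.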

The majority of the solutions that provide computer aided detection are meant for clinical support use, with a final diagnosis needing to come from a physician. Based on the research being done within hospital systems and academia, I would anticipate the list of conditions with clinical support to steadily increase and, as training sets are expanded and algorithms improve, a steady flow of products moving from clinical support to providing a complete diagnosis.

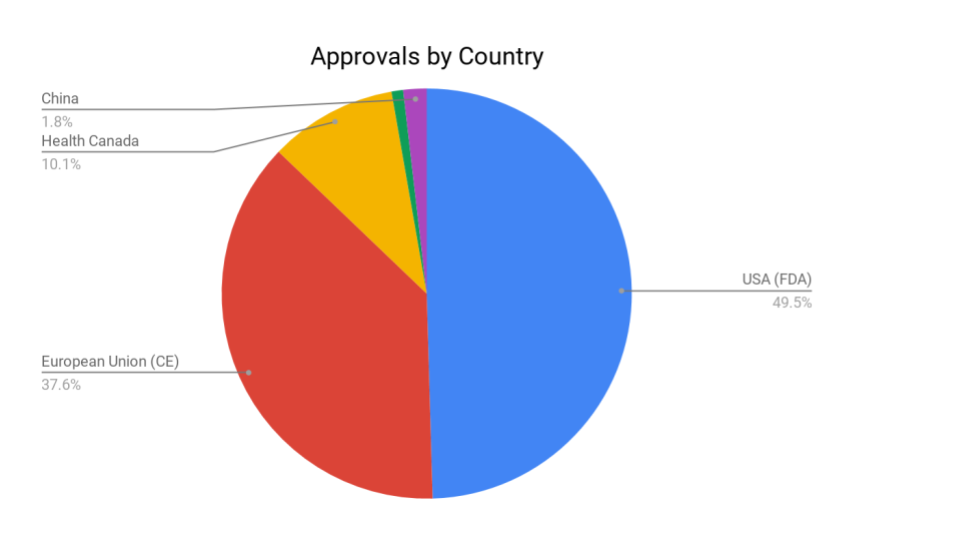

The software medical devices that provide clinical support for these 100+ conditions are built by roughly 50 organizations. 25 of those firms have received regulatory approval in some jurisdiction. Of these devices, the US FDA has approved 54, the European Union 41, with Japan, China, Canada, South Korea, and Australia approving a smaller number.

The US and Europe together account for the largest portion of approvals granted, followed by Canada and China. The data, I suspect, is not truly representative, as we’ll see below that China is in many ways much more advanced in its development and deployment of automated diagnostics. I’ll touch on this more, but lung cancer screening, for instance, has been rolled out across nearly 280 hospitals in China (link) - a deployment figure no other country is close to.

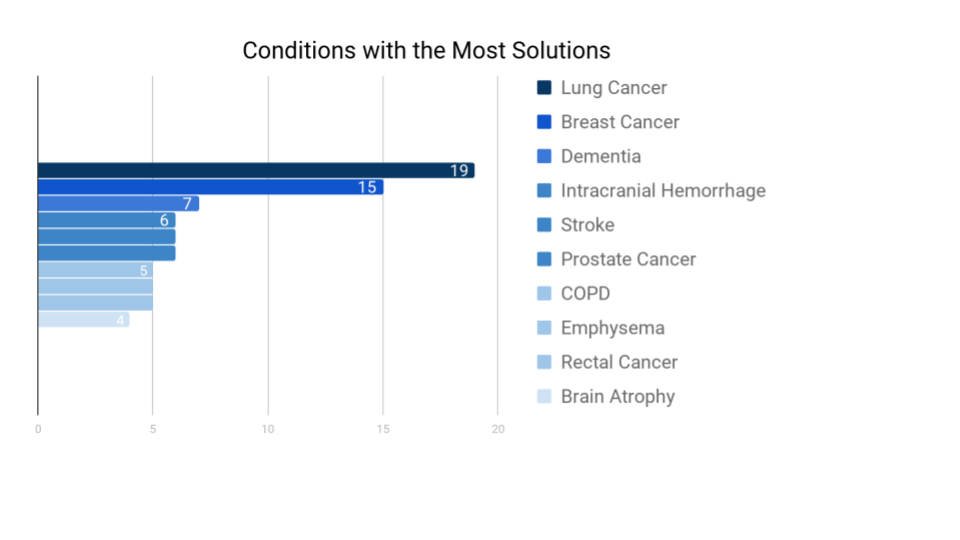

There is a clear focus on lung and breast cancer, with a collection of other conditions receiving focus from a half dozen companies before the solutions per condition drop into the low single digits.

Implications for care providers and patients.

What does it all mean? The absolute number of conditions that have an automated diagnosis is low. Even so, based on published research, the number of computational diagnostic efforts underway in hospital systems, and the number of clinical support solutions on the edge of attaining the performance level needed for automated diagnosis, I would anticipate a steady expansion of clinical support and complete diagnosis solutions entering the marketplace in 2019.

There are many - I think mostly positive - implications for healthcare as the performance of models improves and the coverage of conditions that can be automatically diagnosed expands.

Rural populations with low physician-to-patient ratios stand to benefit immensely. We are in fact seeing this in China, driven by need intersecting with technology capabilities.

Infervision chest x-ray has been deployed in 280 hospital systems.

Ping An in China has installed ‘One-minute Clinics’ that use conversational AI to gather symptom and illness history information and provide a preliminary diagnosis. I would imagine image-based diagnostics are coming soon to the kiosks.

The nature of an automated system is that there is a low marginal cost to run prior records through diagnostic models. This means that, as new products become available and existing products advance, a provider can re-run images from patient records. As one example, if a diabetic retinopathy software solution gains a material performance boost, a clinic could re-process the images on behalf of patients. This course of action isn’t realistic with humans making the diagnoses. There’s not a feasible way for a hospital system to have radiologists re-review the tens of thousands of mammograms, x-rays, brain scans, chest x-rays, and other images that are in patient health records.

For software, though, it is a straightforward engineering project to take additional passes over the images.

According to a November 2018 publication by the Radiological Society of North America, 20% of newly diagnosed cancers and almost 30% of interval cancers go undetected due to missed or misdiagnosed abnormalities. This is an opportunity for automated diagnostics to step in. With no additional imaging taken, a hospital could run patient images through one or more of the existing solutions that provide clinical support to flag images for re-review.
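Here is a minimal sketch of what such a re-review pass could look like. Everything in it is hypothetical: the record fields, the 0.7 threshold, and especially `toy_model`, which is a placeholder standing in for a real clinical support product and has no diagnostic meaning.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

@dataclass(frozen=True)
class ArchivedImage:
    record_id: str
    pixels: bytes         # stand-in for the stored image data
    prior_reading: str    # earlier human reading, e.g. "negative" or "positive"

def flag_for_rereview(archive: Iterable[ArchivedImage],
                      score_image: Callable[[bytes], float],
                      threshold: float = 0.8) -> List[str]:
    """Return record ids where a prior negative reading conflicts with the model."""
    flagged = []
    for image in archive:
        if image.prior_reading != "negative":
            continue  # only previously negative readings are re-checked
        if score_image(image.pixels) >= threshold:
            flagged.append(image.record_id)
    return flagged

def toy_model(pixels: bytes) -> float:
    # Placeholder scoring only: the fraction of bytes equal to 0xFF.
    return pixels.count(0xFF) / len(pixels) if pixels else 0.0

# Made-up archive entries, for illustration only.
archive = [
    ArchivedImage("rec-001", bytes([255, 255, 255, 0]), "negative"),
    ArchivedImage("rec-002", bytes([0, 0, 0, 255]), "negative"),
    ArchivedImage("rec-003", bytes([255, 255, 255, 255]), "positive"),
]

print(flag_for_rereview(archive, toy_model, threshold=0.7))  # ['rec-001']
```

The software only flags records; in a clinical support setting, a physician would still review each flagged image and make the final call.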

Of note, while early detection and more accurate screenings will be possible, treatment plans remain a complex issue. Some instances of a disease advance rapidly and warrant treatment, while other instances may advance slowly enough that the person will die with, not because of, the disease. Depending on the ramifications of undergoing treatment, it is often advisable to live with the condition.

Primary Care physicians will be able to diagnose an increasing number of conditions. The types of services and range of care that primary care clinics are able to offer will change with advances in automated diagnostics. Many of the services that traditionally required specialists to diagnose will be diagnosed by generalists, as is possible with diabetic retinopathy.

Direct to consumer solutions are entering the marketplace. In the dermatology field, a European company, SkinVision, uses AI to check for signs of cancer from images taken with a person’s phone via their mobile app.

The solution is consistent with dermatology research from Stanford where researchers achieved dermatologist-level classification of skin cancer, as discussed in a paper in which “An artificial intelligence trained to classify images of skin lesions as benign lesions or malignant skin cancers achieves the accuracy of board-certified dermatologists.”

Founded in 2011, the SkinVision application has over a million users worldwide. Using this and similar technologies, such as SkinIO’s self exams, people without access to dermatology clinics can get screened.

It seems plausible, with this type of comprehensive and easy to use early detection, that late stage skin cancer could become a very rare progression. On the healthcare system end of things, clinics that generate a portion of their revenue from initial screenings will need to adapt to a world in which their patients mostly need biopsies and treatment rather than screening services.

AI powered full and partial diagnoses will support a general trend towards healthcare delivery outside of hospitals.

As an example, the latest Apple Watch includes an ECG app that can record the user’s heartbeat and rhythm as well as generate a report to share with a physician.

A variety of other vendors are also working on digital biomarker solutions, such as Biofourmis and iRhythm. Biofourmis, which is FDA and CE approved, captures patient health data from wearable sensors and populates electronic health records with this information for clinicians to review.

iRhythm offers a wearable sensor that captures and analyzes heart data through their FDA approved device, the Zio Patch. As a sign of the advances that automated diagnostics are making, in a project involving Stanford and iRhythm, researchers built an AI model that was able to perform at a human level for annotating a dozen types of arrhythmia. You can read more about the findings on mobihealthnews in which they go into more details around the group’s development of a software program that performs on par with board certified cardiologists.

As a final word, in narrow tasks such as the diagnostic solutions covered here, AI performs very well, but as Yoshua Bengio said in a podcast on The AI Element, “[AI] is like an idiot savant” with no notion of psychology, what a human being is, or how it all works.

We’ll need talented healthcare professionals to provide context for these narrow solutions for the foreseeable future.

Written by Trevor z Hallstein, Healthcare Product Lead at Swish Labs